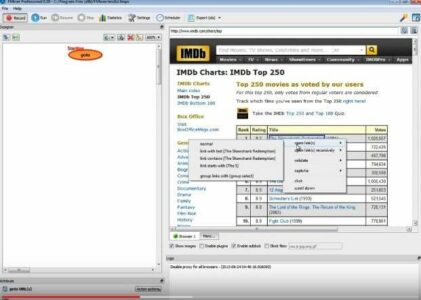

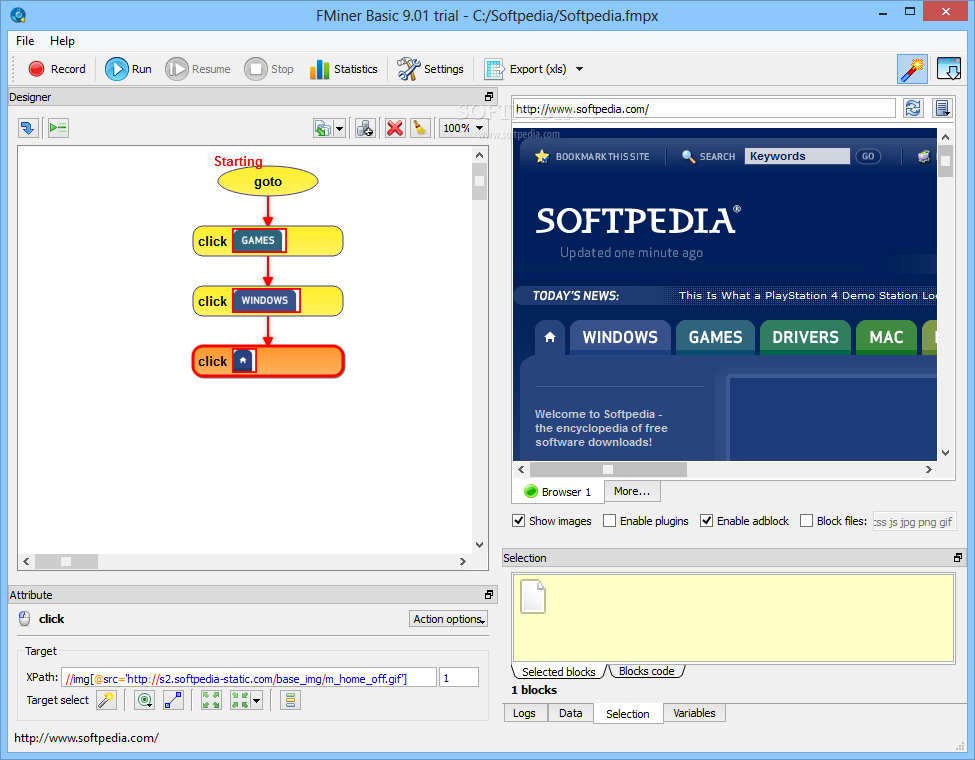

Approaches/tools for web scraping

a) Browser extension-based utilities - There are a couple of browser-based utilities, such as OutWit Hub for Firefox and Web Scraper for Chrome.

b) Third-party tools - Tools like FMiner, Octoparse, Dexi.io, etc.

c) BeautifulSoup package - This Python package comes with built-in functions for parsing an HTML page and extracting data by traversing the HTML tree using tags.

d) Scrapy - An open-source framework for scraping and developing advanced web crawlers.

When you require a sizable and repeatable extraction of data for your project, most ready-made tools eventually run into roadblocks. With BeautifulSoup or Scrapy, we have the flexibility to tune the utility to our needs. Another advantage of writing your own tool is that you can build much of the data cleaning and pre-processing into it, so that the first cut of the extracted data is already moderately clean. In the subsequent sections, we will discuss in detail how to use the BeautifulSoup package for scraping. The latest major version available for download is Beautiful Soup 4, which works best with Python 3 and above.

Beautiful Soup - Python-based approach

Beautiful Soup is a Python library for extracting data from HTML and XML files. BS4 can be installed like any other standard Python library, using pip install beautifulsoup4. For HTML, BS4 programs can work with the standard HTML parser available within Python, but it also supports other parser libraries such as lxml, which need to be installed separately.

How does it work? - Example

a) In this example we are using a tourism website. In the next steps, we will extract data from this webpage.

b) Here we will extract every place listed on the page, along with the description and the user reviews posted for each place.

c) The function get_html_to_soup accepts a generic URL as input and returns the Beautiful Soup object.
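The get_html_to_soup function mentioned above is not shown in the text, so here is a minimal sketch of what such a helper might look like. Only the function name and its behavior (a URL in, a Beautiful Soup object out) come from the article. The use of urllib.request for the download, the User-Agent header, the timeout parameter, and the choice of the built-in html.parser are all assumptions made for illustration.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup


def get_html_to_soup(url, timeout=10):
    """Fetch the page at `url` and return it parsed as a BeautifulSoup object."""
    # Assumption: some sites reject requests that carry no User-Agent header,
    # so a generic one is sent. The `timeout` parameter is also an assumption.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        html = response.read()
    # "html.parser" is the parser bundled with Python; "lxml" could be passed
    # instead if that library has been installed separately.
    return BeautifulSoup(html, "html.parser")
```

Calling soup = get_html_to_soup(url) with the address of the page to scrape returns an object that can then be searched with methods such as soup.find and soup.find_all. Which tags and classes to search for depends on the markup of the target site.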
